Materialization#

Querona supports multiple modes of materialization of your data, configured per view.

Materialization (caching) means that for query processing, Querona uses data from the materialized copy (cache) instead of reaching out to the data source. It is especially efficient to cache data that is time and resource consuming to gather. On the other hand, caching means that data is only as current as of last cache load.

Newly configured materialization is not initialized. Depending on the mode used, materialization uses one of the following:

lazy, initialized at first call for data,

on-demand, uses a scheduled job or manual stored procedure call.

A partition is a physical division of data into distinct, independent parts using some consistent rule (partitioning key). In other words, data is stored and processed in blocks called partitions.

Partitioning is one of the most widely used techniques to optimize physical data layout. It provides a coarse-grained index for skipping unnecessary data reads when queries have predicates on the partitioned columns.

Partitioning may substantially increase performance when:

Processing can skip partitions; for example, billing data is partitioned by the country code, and query requires processing data for one particular country. In that case, only one partition will be processed.

Processing can be parallelized; for example, query requires grouping by country, and that can be done in parallel for each country partition.

The number of distinct values in each column is less than tens of thousands.

Basic usage#

The newly configured view materialization is not initialized - no data copy exists.

In-memory materialization populates cache on the first usage (lazy initialization). To make sure view is materialized, consult ‘Cached’ column on ‘Tables & views’ page.

The following scenario assumes that Apache Spark is used as a virtual database back-end.

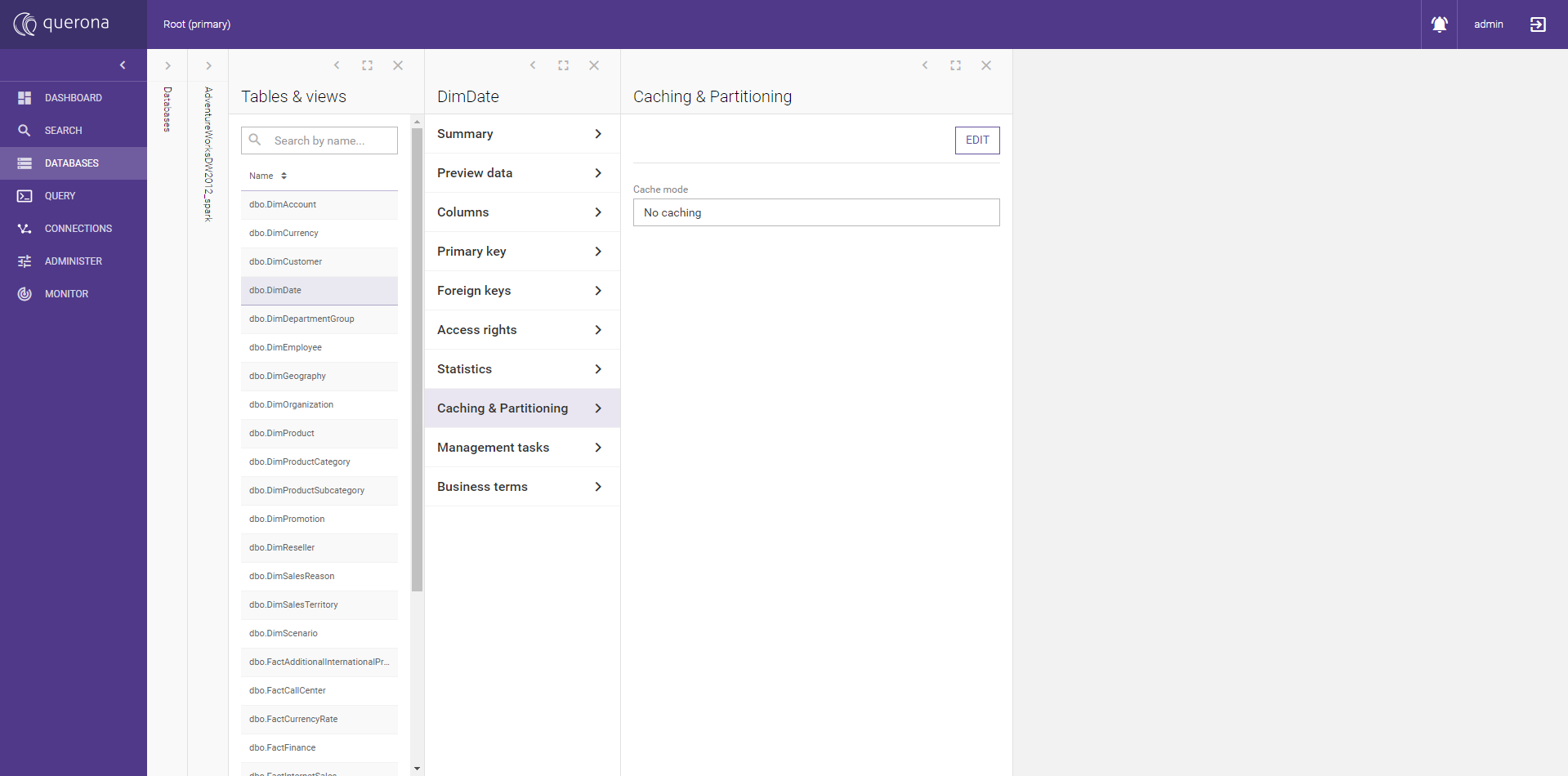

Go to , select database and then the view for which you wish to configure materialization.

While in view details, go to tab.

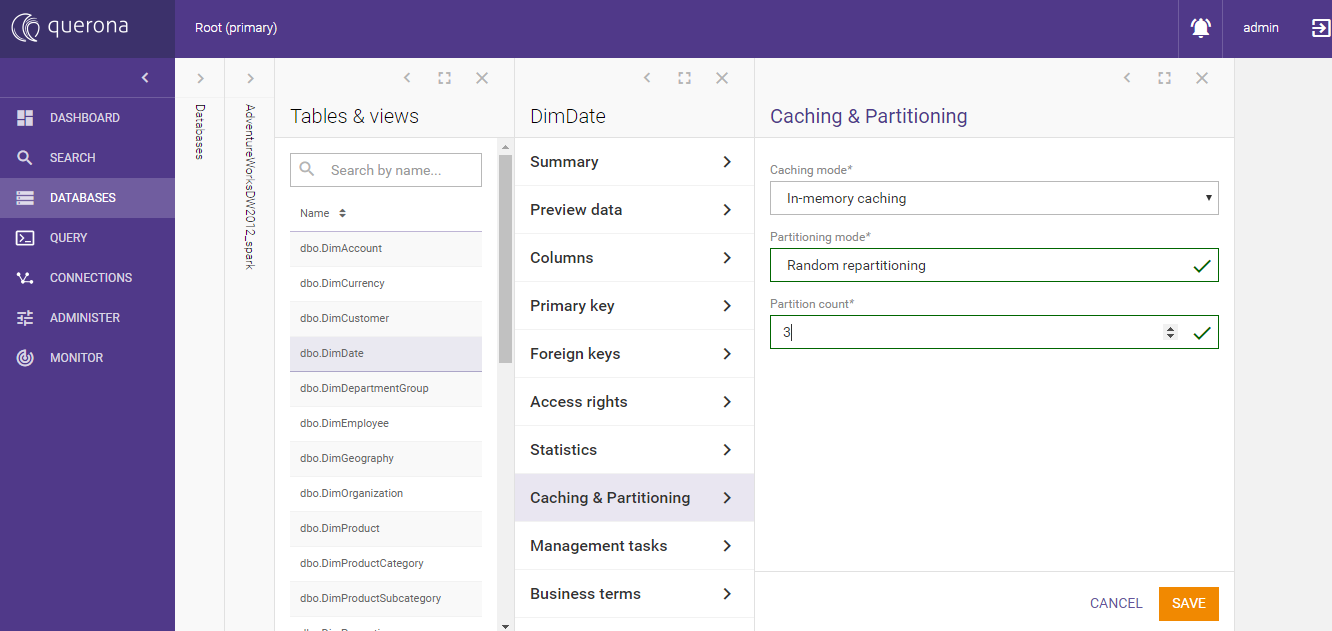

Click Edit and select caching mode, then partitioning mode if necessary or ‘No partitioning’.

Click Save. Materialization is now configured, but the cache is not yet populated.

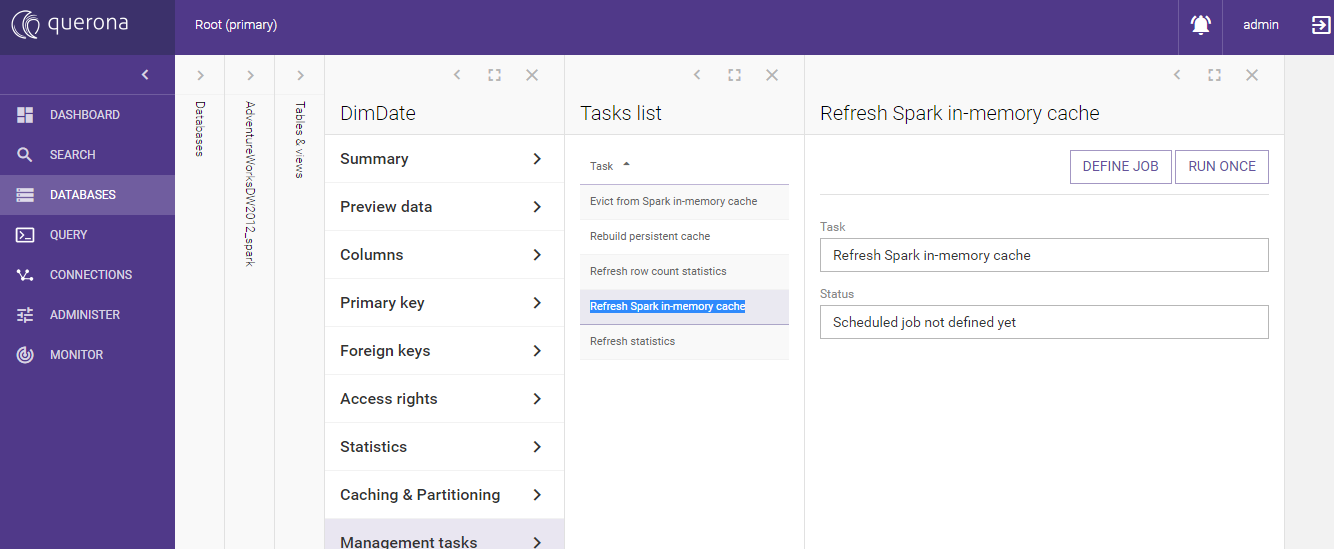

Go to Management Tasks tab.

6. If the persistent cache was selected, click Rebuild materialized view. If the in-memory cache was selected, click Refresh Spark’s memory cache, then click Run once button, to populate the cache. Strictly speaking, the in-memory cache would be reloaded on the first usage if it was not loaded previously.

After a while, Querona will confirm that view was materialized.

8. You can review caching state on Tables & views tab. Optionally, instead of manually materializing the view, you can set up a job that will materialize the view according to the selected schedule.

Instead of clicking Run once as in 6, click Define job.

After the job is created, go to Schedules tab.

Click Add schedule and select one of the available schedules. The selected schedule will appear on the list.

The materialized view will be automatically refreshed according to the selected schedule.

Materialization strategies#

Materialization is available for views defined in virtual databases, which as a back-end use a supported database engine. In-memory cache modes are available only for engines that support caching data in memory (eg. Apache Spark.)

In-memory caching#

Data is stored in RAM. Access times may be better, compared to the persistent materialization. On the other hand, when Spark process exits, the in-memory cache is lost and will be initialized again on first use.

Persistent caching - one table#

Data is stored in a single table. Table format may differ depending on what data processing system was used as virtual database back-end.

Persistent caching - two rotated tables#

Working set of data is stored on disk in a single table, but there is also second ‘buffer’ table that is used as insert target when the data is reloaded. When reloading data, _A and _B tables are both present on disk doubling peak storage required. On the other hand, materialization does not interfere with current queries.

Persistent caching - one table, in-memory#

Data is stored both in-memory and on-disk. When Spark is restarted, cached data can be loaded from the local storage.

Persistent caching - two rotated tables, in-memory#

The combination of in-memory and persistent rotated caching. It takes most space, both disk, and RAM, but potentially offers the best performance of in-memory processing while still being persistent and able to reload in the background.

In the case of Spark, table compression may be in effect. Disk and RAM representations may differ.

Index propagation#

When the given view has an index defined, the caching mode is set to Persistent, and the underlying provider supports creating indexes, then the index is created and built on the physical data store. This might significantly speed up query execution.

A new index can be defined as well, but propagating it to the underlying provider requires the cache to be recreated.

Note

For more information about indexes see Indexes.

Partitioning#

Partitioning is only available for views cached using data processing systems that support it, eg. Apache Spark. Available partitioning modes depend on selected materialization strategy.

Note

Take into account that creating too many partitions (for 10x or 100x times more than available cores) can severely hinder performance. On the other hand, too little partitions (for example 1/10th of available cores) can limit potential parallel processing.

In-memory partitioning modes#

Partitioning mode |

Description |

|---|---|

Random repartitioning |

Rows will be randomly allocated to all partitions. |

Linear column partitioning |

Rows will be allocated to partitions based on the selected column value. The number of partitions and min/max values of supported partition keys is configurable. |

Column value based |

Instead of relying on auto-division of rows, you specify particular values of the partition key for each partition. |

Column value ranges based |

This mode is extended version of column value based mode. Instead of selecting a single value for each partition, you can choose to use the whole range. |

Predicate-based |

Most flexible, but also most complicated mode. For each partition, you provide handwritten SQL predicate using views columns. Rows that satisfy predicate will fall into given partition. Note that user is responsible for making sure predicates are valid Spark SQL and are useful predicates for partitioning. |

Persistent caching#

You select set of columns over which partitioning will be enforced.

Advanced configuration#

The advanced configuration allows users to fine-tune the settings of a cache table that stores the data supporting the view.

Table storage format#

The table storage format controls the physical format of the table. The following modes are supported for Spark:

Format |

Description |

|---|---|

Default |

The format of a table is taken from the default configuration of the Spark instance used. Usually ORC or Parquet. |

Parquet |

Parquet, an open-source file format for Hadoop stores nested data structures in a flat columnar format. Compared to a traditional approach where data is stored in a row-oriented approach, parquet is more efficient in terms of storage and performance. It is especially good for queries that read particular columns from a wide (with many columns) table since only needed columns are read and IO is minimized. |

ORC |

The Optimized Row Columnar (ORC) file format provides a highly efficient way to store data. It was designed to overcome the limitations of other file formats. It ideally stores data compact and enables skipping over irrelevant parts without the need for large, complex, or manually maintained indices. The ORC file format addresses all of these issues. ORC stores collections of rows in one file and within the collection the row data is stored in a columnar format. |

JSON |

JavaScript Object Notation (JSON) is an open standard file format, and data interchange format, that uses human-readable text to store and transmit data objects consisting of attribute-value pairs and array data types (or any other serializable value). |

CSV |

A comma-separated values (CSV) file is a delimited text file that usually uses a comma to separate values. Each line of the file is a data record. Each record consists of one or more fields, separated by a delimiter. |

Delta |

As of Spark v3, the Delta format is supported. Delta Lake is an open-source storage layer that brings ACID transactions to Apache Spark. |

Parquet settings#

The following Parquet-specific settings are supported when using Parquet format:

Key |

Default value |

Setting Notes |

|---|---|---|

compression |

snappy |

Compression codec to use when saving to file. This can be one of the known case-insensitive shorten names(none, uncompressed, snappy, gzip, lzo, brotli, lz4, and zstd). |

ORC settings#

The following ORC-specific settings are supported when using ORC format:

Key |

Default value |

Setting Notes |

|---|---|---|

orc.compress |

ZLIB |

Compression type (NONE, ZLIB, SNAPPY, LZO). |

orc.compress.size |

262,144 |

Number of bytes in each compression block. |

orc.stripe.size |

268,435,456 |

Number of bytes in each stripe. |

orc.row.index.stride |

10,000 |

Number of rows between index entries (>= 1,000). |

orc.create.index |

true |

Sets whether to create row indexes. |

orc.bloom.filter.columns |

not set |

Comma-separated list of column names for which a Bloom filter must be created. |

orc.bloom.filter.fpp |

0.05 |

False positive probability for a Bloom filter. Must be greater than 0.0 and less than 1.0. |

JSON settings#

The following JSON-specific settings are supported when using JSON format:

Setting |

Default value |

Setting Notes |

|---|---|---|

compression |

null |

Compression codec to use when saving to file. This can be one of the known case-insensitive shorten names (none, bzip2, gzip, lz4, snappy and deflate). |

dateFormat |

yyyy-MM-dd |

Sets the string that indicates a date format. Custom date formats follow the formats at Datetime Patterns. This applies to date type. |

timestampFormat |

yyyy-MM-dd’T’HH:mm:ss[.SSS][XXX] |

Sets the string that indicates a timestamp format. Custom date formats follow the formats at Datetime Patterns. This applies to timestamp type. |

encoding |

not set |

Specifies encoding (charset) of saved json files. If it is not set, the UTF-8 charset will be used. |

lineSep |

\n |

Defines the line separator that should be used for writing. |

ignoreNullFields |

true |

Whether to ignore null fields when generating JSON objects. |

CSV settings#

The following CSV-specific settings are supported when using CSV format:

Setting |

Default value |

Setting Notes |

|---|---|---|

sep |

, |

Sets a single character as a separator for each field and value. |

quote |

“ |

Sets a single character used for escaping quoted values where the separator can be part of the value. If an empty string is set, it uses u0000 (null character). |

escape |

\ |

Sets a single character used for escaping quotes inside an already quoted value. |

charToEscapeQuoteEscaping |

escape or 0 |

Sets a single character used for escaping the escape for the quote character. The default value is escape character when escape and quote characters are different, 0 otherwise. |

escapeQuotes |

true |

A flag indicating whether values containing quotes should always be enclosed in quotes. Default is to escape all values containing a quote character. |

quoteAll |

false |

A flag indicating whether all values should always be enclosed in quotes. Default is to only escape values containing a quote character. |

header |

false |

Writes the names of columns as the first line. |

nullValue |

empty string |

Sets the string representation of a null value. |

emptyValue |

“” |

Sets the string representation of an empty value. |

encoding |

not set |

Specifies encoding (charset) of saved csv files. If it is not set, the UTF-8 charset will be used. |

compression |

null |

Compression codec to use when saving to file. This can be one of the known case-insensitive shorten names (none, bzip2, gzip, lz4, snappy and deflate). |

dateFormat |

yyyy-MM-dd |

Sets the string that indicates a date format. Custom date formats follow the formats at Datetime Patterns. This applies to date type. |

timestampFormat |

yyyy-MM-dd’T’HH:mm:ss[.SSS][XXX] |

Sets the string that indicates a timestamp format. Custom date formats follow the formats at Datetime Patterns. This applies to timestamp type. |

ignoreLeadingWhiteSpace |

true |

A flag indicating whether or not leading whitespaces from values being written should be skipped. |

ignoreTrailingWhiteSpace |

true |

A flag indicating defines whether or not trailing whitespaces from values being written should be skipped. |

lineSep |

n |

Defines the line separator that should be used for writing. Maximum length is 1 character. |