Instance configuration#

The are two models of connecting and working with Apache Spark:

using a local managed instance(s),

using an existing cluster (e.g. Azure HDInsight).

We can configure and run as many instances as needed, as long as they use different listening ports.

Note

To communicate with Spark, Querona uses a Driver, which is a Java application acting as a proxy, delegating incoming requests to and from Spark. Once started, the Driver listens for incoming connections from Querona.

Incoming requests are authenticated using an API-key. When required, the Driver opens the reverse connections to Querona via TDS, using the authentication method set on the Spark connection.

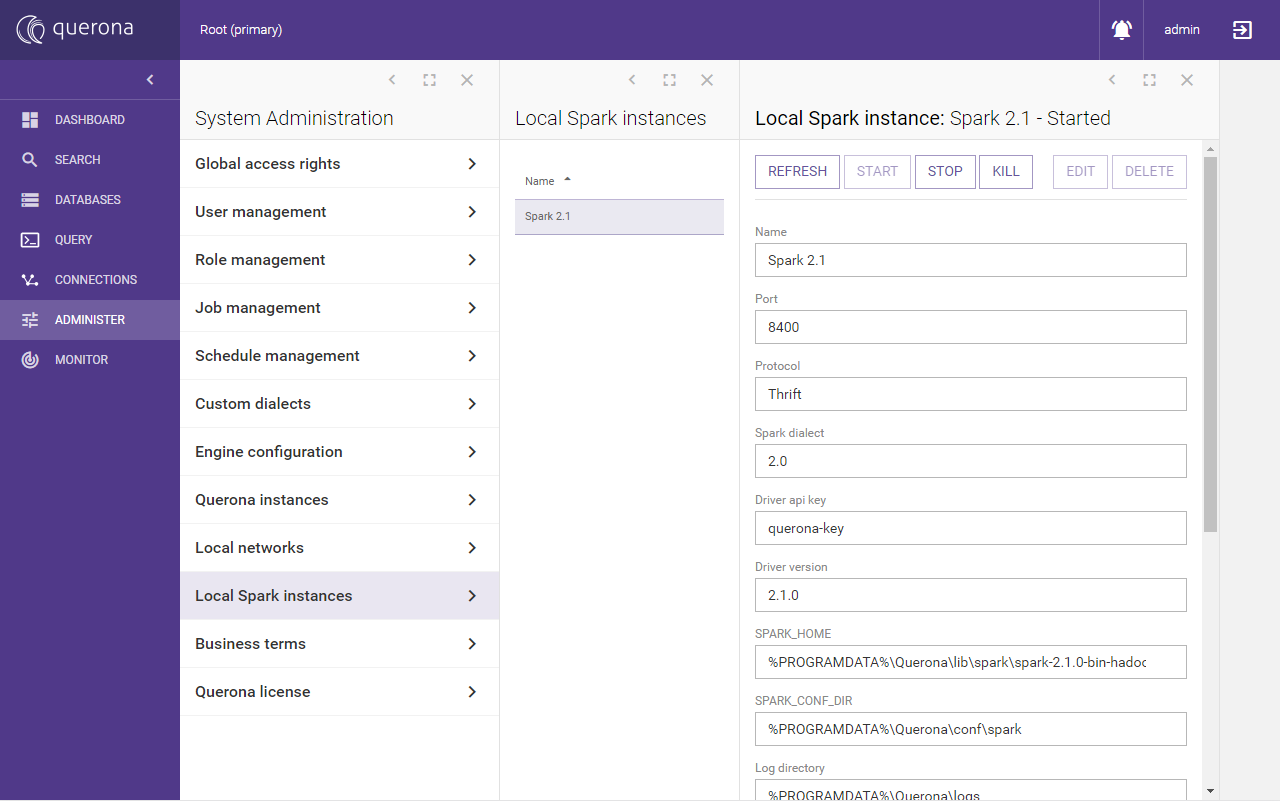

Local Instance Configuration#

Navigate to .

Select the default Spark instance, usually found under name like “Spark 3.x”.

The following configuration settings can be set for a given instance:

Parameter |

Description |

Default value |

|---|---|---|

Name |

A friendly name of the instance e.g. Spark 3 |

|

Port |

The port that the driver will open to communicate with Querona. |

8400 |

Protocol |

The protocol that the driver will use to exchange data with Querona. Possible values: Thrift, http |

Thrift |

Spark dialect |

The dialect version used to communicate with the driver. Possible values: 2.0, 3.0. |

|

Driver API key |

The security key used to authenticate incoming requests - this must match the key given when configuring the connection to Spark. Using the default value is discouraged. |

querona-key |

Driver version |

The version of the driver to be deployed to the instance - should match the used Spark version. |

3.0.1 |

Delta Lake version |

The version of Delta Lake libraries to use. Each Delta Lake version is compatible with one or more Spark versions. Setting to Auto instructs Querona to infer the latest compatible Delta Lake version from Spark binaries. Setting to None disables loading of Delta Lake libraries. For more information about Delta Lake and Spark compatibility see io.delta releases at. |

3.0 (Spark 3.5) |

SPARK_HOME |

The root directory of Spark distribution used. |

%PROGRAMDATA%\Querona\lib\spark\spark-3.5.5-bin-hadoop3 |

SPARK_CONF_DIR |

The directory where Spark configuration is being stored |

%PROGRAMDATA%\Querona\conf\spark |

Log directory |

The directory where Spark log files are being generated. No spaces allowed. See also: Disable Log Tracing |

%PROGRAMDATA%\Querona\logs |

Spark master |

Spark Master URL |

local[*] |

Driver memory |

Amount of memory to use for the driver process. |

4g |

Executor memory |

Amount of memory to use per executor process |

4g |

Total executor cores |

The number of cores to use on each executor |

4 |

_JAVA_OPTIONS |

Value of _JAVA_OPTIONS variable picked up by Spark. Note: setting too low like “-Xms1G” might trigger the following startup error: “‘Initial’ is not recognized as an internal or external command” |

|

Autostart |

Sets whether the given instance should be automatically started together with Querona. |

False |

Persistent |

Sets whether the given instance should be left alive even the master Querona process is stopped. |

False |

Enable Log Tracing |

Checking this flag will cause Querona to start tracing and forwarding Spark log entries. It’s useful when using a logging software such as SEQ, to have all logs in one place. |

False |

Advanced settings#

Querona stores a few configuration files in C:\ProgramData\Querona\conf\spark (by default).

File |

Description |

|---|---|

core-site.xml |

Querona-specific HDFS configuration |

hive-site.xml |

Querona-specific Hive configuration |

querona-site.xml |

Querona configuration parameters |

spark-env.cmd |

Spark environment variables configuration |

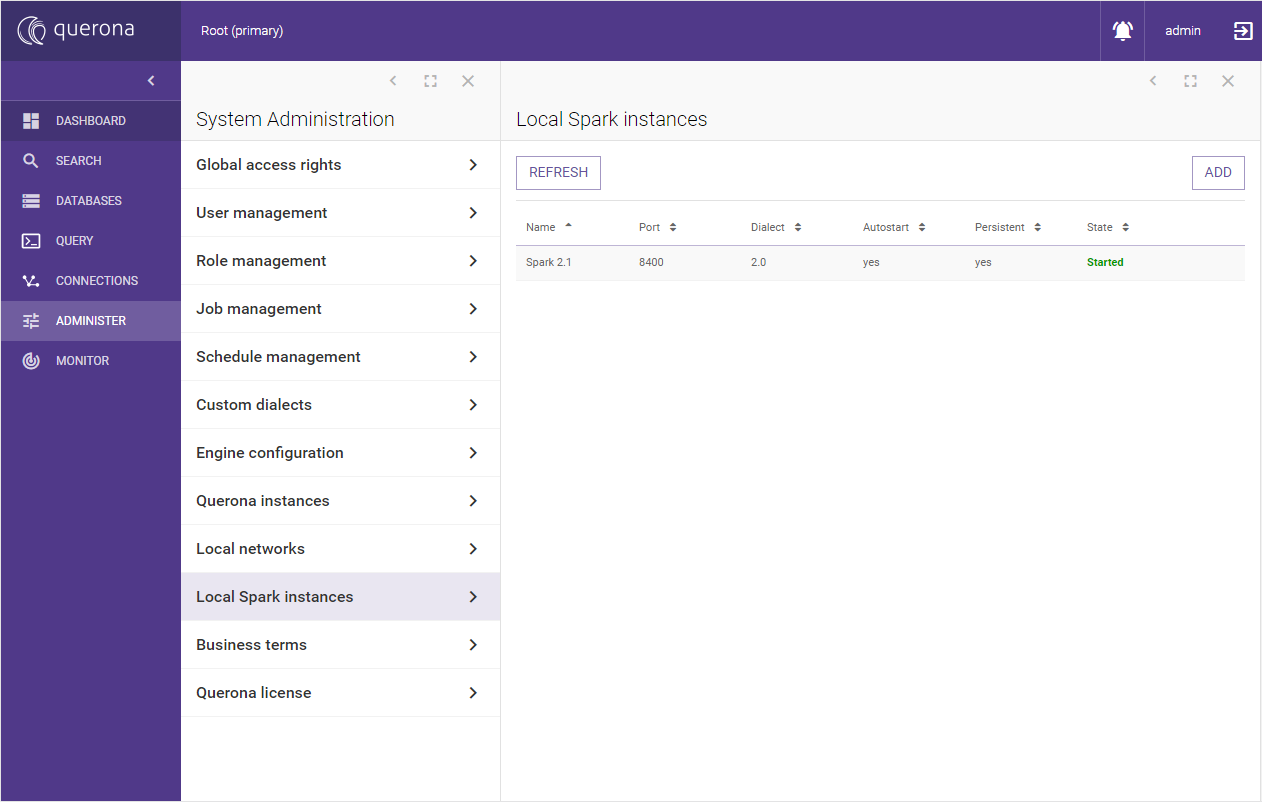

Adding a managed instance#

Navigate to .

To add a new local Spark instance:

Click the Add button

Uniquely name your instance

Set values of the required settings and Save

To access your new instance, you need to create a Connection configuration targeting it.